OpenStreetMap in the age of Spark

Update: Telenav publishes weekly planet Parquet files at osm-data.skobbler.net

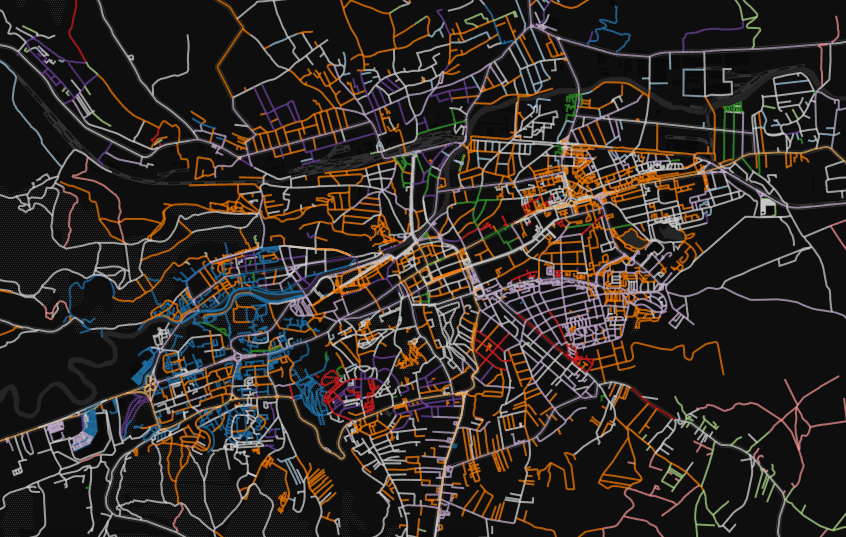

OpenStreetMap (OSM) is an open, collaborative map, often called the Wikipedia of maps. There is plenty of information about it online, and even a few books… anyway, the history of OSM is not what we will discuss here. Instead, we will take a look at the impressive dataset behind it and at how such a big map can be analysed with modern technologies such as Apache Spark.

A short anatomy of OpenStreetMap

One of the reasons OSM got where it is now is its quite simple data model. There are only three types of entities:

- nodes: geo-localized points on the map

- ways: ordered collections of nodes, e.g. roads, building contours, borders, …

- relations: collections of nodes, ways or other relations, e.g. highways (multiple ways), schools (multiple building contours), …

Each entity can be tagged with key=value pairs (for example, a way tagged with highway=residential is a road whose type is residential).

OSM also keeps metadata recording who added each entity and when.
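To make the model concrete, here is a minimal sketch of the three entity types as plain Python dataclasses. It is a simplification for illustration only: the class names, the `Meta` fields and the `has_tag` helper are hypothetical, and real OSM metadata carries more fields (e.g. changeset and user id) than shown here.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Union


@dataclass
class Meta:
    """Who added an entity and when (hypothetical, simplified)."""
    user: str
    timestamp: str  # ISO-8601, e.g. "2015-06-01T10:00:00Z"
    version: int = 1


@dataclass
class Node:
    id: int
    lat: float
    lon: float
    tags: Dict[str, str] = field(default_factory=dict)
    meta: Optional[Meta] = None


@dataclass
class Way:
    id: int
    node_ids: List[int]  # ordered references to Node ids
    tags: Dict[str, str] = field(default_factory=dict)
    meta: Optional[Meta] = None


@dataclass
class Member:
    type: str  # "node", "way" or "relation"
    ref: int   # id of the referenced entity
    role: str = ""


@dataclass
class Relation:
    id: int
    members: List[Member]
    tags: Dict[str, str] = field(default_factory=dict)
    meta: Optional[Meta] = None


Entity = Union[Node, Way, Relation]


def has_tag(entity: Entity, key: str, value: str) -> bool:
    """Return True if the entity carries the tag key=value."""
    return entity.tags.get(key) == value


# Usage: a residential street built from two nodes (made-up ids and coordinates).
a = Node(1, 44.4268, 26.1025)
b = Node(2, 44.4275, 26.1040)
street = Way(10, [a.id, b.id], {"highway": "residential"},
             Meta(user="someone", timestamp="2015-06-01T10:00:00Z"))
route = Relation(100, [Member("way", street.id)], {"type": "route"})
print(has_tag(street, "highway", "residential"))  # True
```

Tags are modelled as a simple dictionary because each key appears at most once per entity, which makes a lookup such as highway=residential a single dictionary access.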

How big is OpenStreetMap, actually?

The data is available at https://planet.openstreetmap.org and it comes in two formats:

- XML: ~53 GB

- PBF: ~34 GB

If you are interested in how the map evolves on a daily basis, take a look at OSMstats.

Is OpenStreetMap Big Data ready?

When I first started working with OpenStreetMap (mid-2015), I was a bit intrigued to see people waiting hours or even days to import a piece of OSM into PostgreSQL on huge machines. But I said OK… this is not Big Data.

Meanwhile, I started working on various geo-spatial analyses that used OSM together with technologies from the Big Data stack, and I was intrigued again: the usual way to handle the OSM data was to run osmosis over the huge PBF planet file and dump CSV files for each scenario. Even if this works, it is suboptimal, since every scenario needs its own ad-hoc export. This is what made me write a converter to Parquet, a columnar format that Spark can read directly.

The converter is available at github.com/adrianulbona/osm-parquetizer.

Hopefully, this will make the valuable work of so many OSM contributors easily accessible to the Big Data world.
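Parquet stores the values of each field together rather than row by row, which is what lets Spark read only the columns a query needs. The plain-Python sketch below illustrates that idea for OSM ways; it is not the converter's code. The record layout (tags as a list of key/value structs, way nodes with an explicit position index) and the helper names are assumptions, so check the osm-parquetizer repository for the actual schema.

```python
from typing import Dict, List, Tuple

# Hypothetical parsed way: (id, ordered node ids, tags).
RawWay = Tuple[int, List[int], Dict[str, str]]


def way_to_row(way: RawWay) -> dict:
    """Flatten one way into a nested record suitable for a columnar file.

    Assumed layout: tags become a list of {key, value} structs and node
    references keep their order through an explicit index.
    """
    way_id, node_ids, tags = way
    return {
        "id": way_id,
        "tags": [{"key": k, "value": v} for k, v in sorted(tags.items())],
        "nodes": [{"index": i, "nodeId": n} for i, n in enumerate(node_ids)],
    }


def to_columns(rows: List[dict]) -> Dict[str, list]:
    """Pivot records into one list per field, as a columnar format stores them.

    Assumes every row has the same fields.
    """
    columns: Dict[str, list] = {}
    for row in rows:
        for name, value in row.items():
            columns.setdefault(name, []).append(value)
    return columns


rows = [
    way_to_row((10, [1, 2, 3], {"highway": "residential"})),
    way_to_row((11, [3, 4], {"building": "yes"})),
]
columns = to_columns(rows)
print(columns["id"])  # [10, 11]
```

Keeping tags as a list of structs (instead of one column per key) keeps the schema fixed even though OSM has an open-ended set of tag keys.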

How fast is the conversion?

Less than a minute for romania-latest.osm.pbf and about 3 hours (on a decent laptop with an SSD) for planet-latest.osm.pbf.

Demo

If you are curious what these Parquet files look like and how you can query them with Spark SQL, take a look at this Databricks notebook.
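For a flavour of such a query without a Spark cluster, the sketch below counts ways per `highway` value over in-memory records whose `tags` field is a list of key/value structs (an assumed layout). In Spark SQL a similar query could be written roughly as `SELECT tag.value, count(*) FROM ways LATERAL VIEW explode(tags) t AS tag WHERE tag.key = 'highway' GROUP BY tag.value`, where `ways` is a hypothetical view registered over the way Parquet file. The Python function only mimics that explode-filter-group logic; its name and sample data are made up.

```python
from collections import Counter
from typing import Dict, Iterable


def count_tag_values(rows: Iterable[dict], key: str) -> Dict[str, int]:
    """Count entities per value of `key`, mimicking explode(tags) + GROUP BY."""
    counts: Counter = Counter()
    for row in rows:
        for tag in row["tags"]:  # "explode": one iteration per key/value struct
            if tag["key"] == key:  # WHERE tag.key = <key>
                counts[tag["value"]] += 1  # GROUP BY tag.value, count(*)
    return dict(counts)


# Made-up sample ways.
ways = [
    {"id": 10, "tags": [{"key": "highway", "value": "residential"}]},
    {"id": 11, "tags": [{"key": "highway", "value": "primary"},
                        {"key": "name", "value": "X"}]},
    {"id": 12, "tags": [{"key": "highway", "value": "residential"}]},
    {"id": 13, "tags": [{"key": "building", "value": "yes"}]},
]
print(count_tag_values(ways, "highway"))  # {'residential': 2, 'primary': 1}
```

On the real planet data, Spark distributes exactly this kind of per-row work across partitions of the Parquet files, which is why the columnar conversion pays off.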